Program development

When the NRC released its bold vision for the future of science education in its 2010 Framework for K-12 Science Education, the science pedagogy leaders at the Lawrence Hall of Science quickly began to consider how the concepts could be implemented in real-life classrooms with diverse student bodies. When the Next Generation Science Standards were released in 2013, the team was therefore ready to respond, and immediately got to work on a brand-new curriculum built from the ground up to address the standards. Though the Lawrence Hall of Science already had a number of outstanding programs in its portfolio of curricula, the Learning Design Group of LHS chose not to merely redesign an existing program in order to check the NGSS boxes, but instead to honor the pedagogical shift by creating something designed from the outset around the new standards. A rigorous research and development process, described in the sections below, ensued.

Starting with a research-proven approach

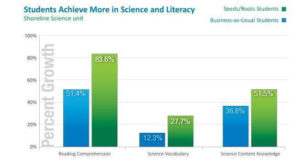

In addition to the guidelines laid out by the Framework, the curriculum developers at the Lawrence Hall of Science brought their literacy-rich, multimodal learning approach of Do, Talk, Read, Write to the table when deciding on initial plans for the new curriculum. This approach, which was used in the Hall’s successful Seeds of Science/Roots of Reading® program, has been extensively assessed by outside evaluators: the National Center for Research on Evaluation, Standards, and Student Testing (CRESST) at the University of California, Los Angeles (2005); Mark Girod at Western Oregon University (2005); and David Hanauer at Indiana University of Pennsylvania (2005). A total of three gold-standard studies were conducted on the Seeds of Science/Roots of Reading® approach, in each case using content-comparable, business-as-usual comparison groups. Across these three studies, students using the Do, Talk, Read, Write approach (i.e., Seeds of Science/Roots of Reading®) consistently outperformed students in the comparison groups on measures of science content knowledge. Some example findings from these evaluations include the following:

- Independent evaluators from the National Center for Research on Evaluation, Standards, and Student Testing (CRESST) at the University of California, Los Angeles found that second- and third-grade students receiving Do, Talk, Read, Write instruction (via Seeds of Science/Roots of Reading®) significantly outperformed students receiving their usual science instruction in reading comprehension, science vocabulary, and science content knowledge.

- English language learners who experienced Do, Talk, Read, Write instruction (via Seeds of Science/Roots of Reading®) significantly outperformed other English language learners in reading comprehension, science vocabulary, and science content knowledge.

Knowing that Do, Talk, Read, Write was benefiting students, the Lawrence Hall of Science curriculum developers decided to use the approach in their new curriculum, but with the enhancement of adding “Visualize,” which would allow students to learn the practice of modeling and observe scientific phenomena in ways never before possible.

Initial development

With a research-proven approach to science learning established, the authorship team at the Lawrence Hall of Science began an iterative process of outlining, writing, testing, and revising 48 units that would become Amplify Science K–8. In the initial drafts, the Hall considered such facets as what the anchoring phenomena could be; what scientist or engineer role students would assume; how students would go about figuring out the phenomena; and which NGSS performance expectations the unit would target. During the initial development, the authors consulted practicing scientists and incorporated their expert advice into the design of the units. Throughout the process of crafting all of the activities, lessons, and units, the team embraced the following principles, which remain evident in the final curriculum:

- Learning organized around the explanation of real-world phenomena.

- Careful bundling and sequencing of disciplinary core ideas to support deep understanding.

- Meaningful focus on crosscutting concepts (CCCs).

- Thoughtful inclusion and sequencing of science and engineering practices (SEPs).

For more information on how these principles are embedded in the program, please see the “Approach” section.

Pilot testing and revisions

In addition to consulting disciplinary experts as they developed each unit, the developers at the Lawrence Hall of Science also worked with students and teachers in and around the San Francisco Bay Area. The developmental pilot tests that the Hall carried out in these classrooms enabled the team to collect data and feedback from real teachers and students; to gauge the level of student engagement in the lessons; and to consider lesson length in the context of an actual classroom. Using this information, the team further revised lessons, refining them over time so that they would serve the learning goals and targeted standards of the respective unit, fit within a 45–60-minute class session (depending on the grade), and be authentically three-dimensional and engaging.

Field testing and revisions

After completing the pilot-test-informed revisions, the Lawrence Hall of Science conducted large-scale field tests to see how well the materials worked in the hands of real teachers and in a wide range of classrooms. More than 475 teachers and 34,000 students in cities, suburbs, and rural communities across the country used Amplify Science in their classrooms between 2014 and 2016. Students who participated benefited from their use of the curriculum. Some of the insights gleaned from these field tests are discussed below:

Findings from first-grade field tests

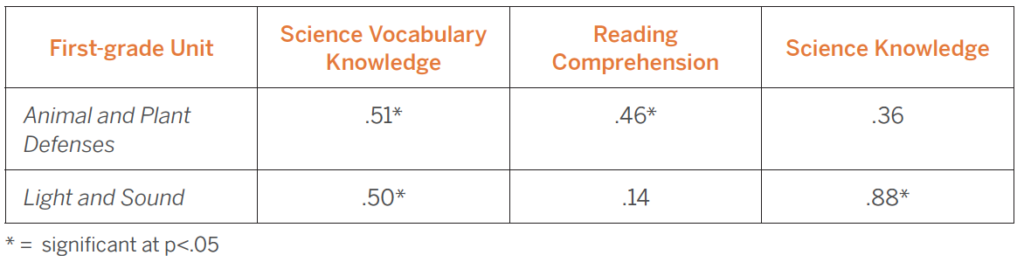

As part of a Department of Education-funded project (First Grade Second Language: Uniting Science Knowledge and Language Development for English Learners), the developers at the Lawrence Hall of Science conducted classroom implementation trials of first-grade Amplify Science units.

Scores on outcome measures for students in the classrooms assigned to teach Amplify Science were compared to scores for a similar number of students in classrooms using a business-as-usual curriculum. The study looked at three measures of performance (unit-aligned assessments of science vocabulary knowledge, reading comprehension of informational texts, and science knowledge), comparing the pre-to-post gains of students in the Amplify unit to those of students in the business-as-usual curriculum. In all comparisons, the Amplify Science treatment group outperformed the business-as-usual group, in some cases by a large margin. The control group never outperformed the treatment group, and the treatment achieved a moderate effect size (0.4 < ES < 0.6). The effect size is a measure of practical significance: the magnitude of change between pre-test and post-test. A statistic called Cohen’s d was used, the value of which ranges from 0 (no change) upward. In education, researchers typically consider effect sizes of 0.4 to be better than average. Effect sizes of 0.6 or greater indicate a highly successful program, and are considered excellent.
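To make the effect-size idea concrete, here is a minimal sketch of one common way to compute Cohen’s d for pre-test and post-test scores. The study does not state which variant of the statistic it used, so the pooled-standard-deviation form below is an assumption, as are the function names and the sample scores, which are hypothetical and not taken from the field tests. The labels simply restate the 0.4 and 0.6 benchmarks quoted above.

```python
from math import sqrt
from statistics import mean, stdev


def cohens_d(pre_scores, post_scores):
    """Cohen's d for pre/post scores.

    Assumption: the standardizer is the pooled standard deviation of the
    two score sets (equal group sizes), which is one common convention.
    """
    sd_pre = stdev(pre_scores)
    sd_post = stdev(post_scores)
    pooled_sd = sqrt((sd_pre ** 2 + sd_post ** 2) / 2)
    return (mean(post_scores) - mean(pre_scores)) / pooled_sd


def interpret(d):
    """Label an effect size with the benchmarks quoted in the text."""
    if d >= 0.6:
        return "excellent"
    if d >= 0.4:
        return "better than average"
    return "below the 0.4 benchmark"


# Hypothetical percent-correct scores, for illustration only.
pre = [40, 50, 60, 70]
post = [50, 60, 70, 80]

d = cohens_d(pre, post)
print(f"d = {d:.2f} ({interpret(d)})")
```

With these made-up scores the mean rises by 10 points against a pooled standard deviation of roughly 12.9, so the effect size lands above the 0.6 benchmark.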

Effect Sizes in Classroom Implementation Trial

Importantly, students demonstrated substantial growth in vocabulary knowledge and in their capacity to describe challenging science concepts. Furthermore, when asked, the teachers who participated overwhelmingly responded that they would teach the Amplify Science units again if given the opportunity. Positive results and feedback were also received from field tests of all other elementary grades.

Findings from grades 6-8 field tests

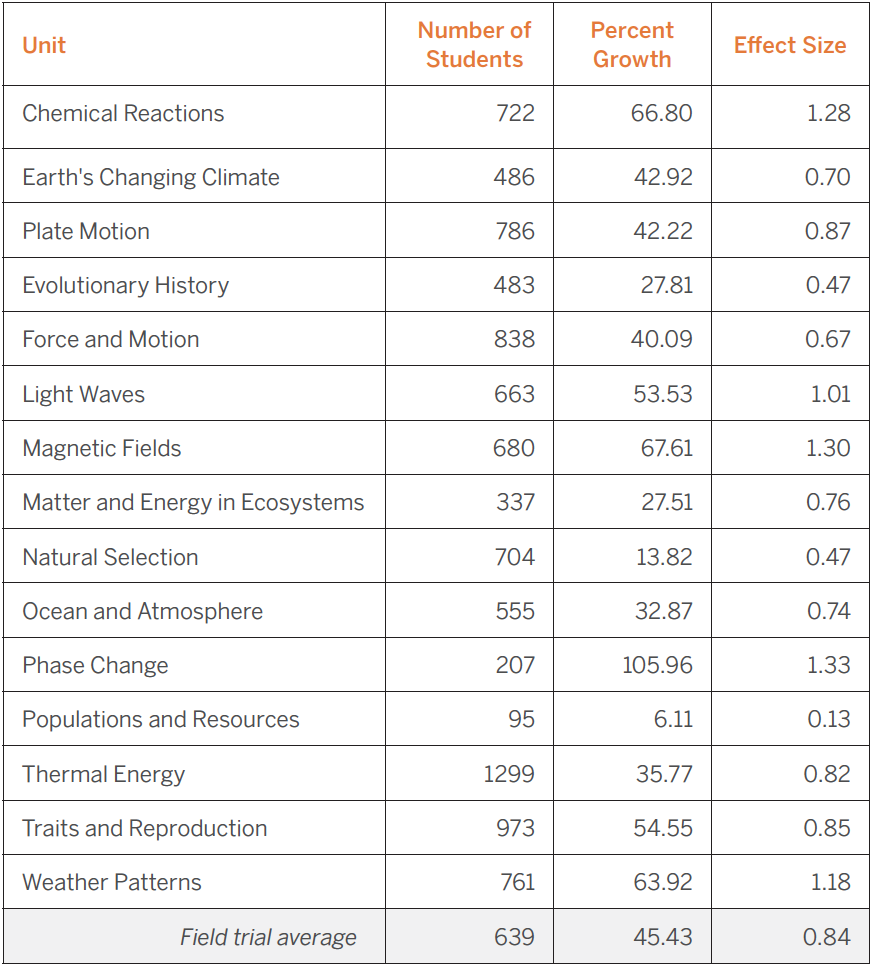

During field tests for each middle school unit, students responded to an assessment prior to instruction and again immediately upon concluding the unit. Each of these pre-unit and post-unit assessments was scored, and student growth was calculated using percent growth and effect size measures (Cohen’s d).

The percent-growth results indicate how much better the class sections did on average between pre-test and post-test. On average, there was a 46 percent growth in student knowledge across the middle school units. Translated into letter grades, that is equivalent to a student increasing two letter grades.
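As a rough illustration of the percent-growth measure, the sketch below assumes it is the relative increase in a class section's average score from pre-test to post-test. The text does not give the exact formula, so that definition, the function name, and the sample averages are all assumptions chosen for illustration.

```python
def percent_growth(pre_mean, post_mean):
    """Relative increase from the pre-test mean to the post-test mean, in percent.

    Assumption: growth is measured relative to the pre-test mean.
    """
    if pre_mean <= 0:
        raise ValueError("pre-test mean must be positive")
    return 100 * (post_mean - pre_mean) / pre_mean


# Hypothetical class-section averages (percent correct), for illustration only.
growth = percent_growth(pre_mean=50, post_mean=73)
print(f"{growth:.0f} percent growth")
```

Under this assumed definition, a section that averaged 50 percent correct before the unit and 73 percent after it would show 46 percent growth.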

Another way to measure efficacy is by looking at the effect size of the student growth. The effect sizes on student growth during the field tests of Amplify Science middle school units were medium to large. With only one exception (Populations and Resources at 0.129), all units had an effect size on student achievement between 0.5 and 1.4. The average effect size across units was 0.84, which is an excellent outcome.

The percent growth and Cohen’s d effect size measures are given for each unit in the table below. Given these results, the typical teacher might expect student knowledge to increase by 50 percent or more per unit, or roughly a 1–2 letter grade improvement.

Knowledge growth and practical effect sizes

After the field tests were completed, the Lawrence Hall of Science organized the copious teacher feedback and student data (such as the above), and proceeded to revise and rewrite the units as needed. Each unit underwent several months of revisions, with changes made to lesson content, technology, artwork, media, and kit materials. From initial drafts written in 2014, through the large-scale field tests of 2014–2016, all unit revisions were complete for the 2016–2017 school year, just in time for teachers to usher in the new year with fully formed, effective, NGSS-designed resources to use with their students.

6. Conduct unit field tests across the United States

More than 475 teachers and 34,000 students in cities, suburbs, and rural communities across the country used Amplify Science in their classrooms between 2014–2016 as part of the development process for the program.

Findings from grades 6-8 field tests

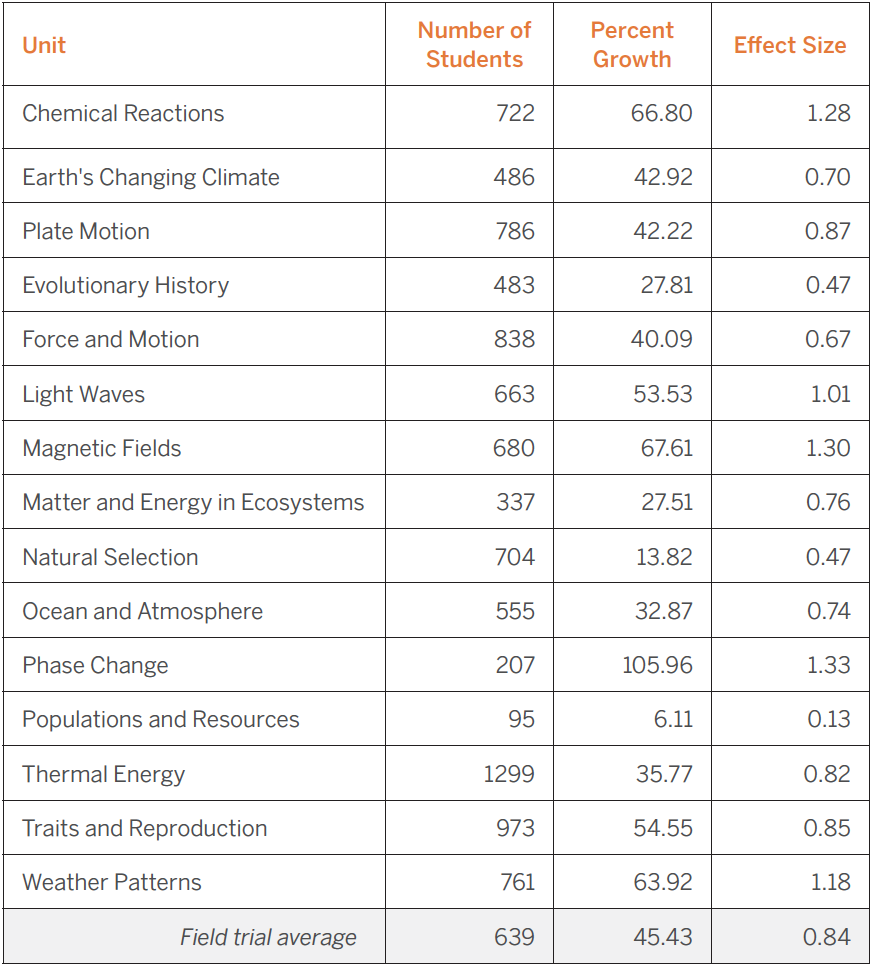

During field tests for each middle school unit, students responded to an assessment prior to instruction and again immediately upon concluding the unit. Each of these pre-unit and post-unit assessments was scored and student growth calculated using percent growth and effect size measures (Cohen’s d).

The percent-growth results indicate how much better the class sections did on average between pre-test and post-test. On average, there was a 46 percent growth in student knowledge across the middle school units. Translated into letter grades, that is equivalent to a student increasing two letter grades.

Another way to measure efficacy is by looking at the effect size of the student growth. This is a measure of the practical significance — the effect size is the magnitude of change between pre-test and post-test. We use a statistic called Cohen’s d, and its value ranges from 0 (no change) and upwards. In education, we will typically consider effect sizes of 0.4 to be better than average. Effect sizes of 0.6 or greater indicate a highly successful program, and are considered excellent.

The effect sizes on student growth during the field tests of Amplify Science middle school units are medium to large. The average effect size across units was 0.84, which is an excellent outcome.

With only one exception (Populations and Resources at 0.129), all units had an effect size on student achievement between 0.5 and 1.4.

The percent growth and Cohen’s d effect size measures are given for each unit in the table below. Given these results, the typical teacher might expect student knowledge to increase by 50 percent or more per unit, or roughly a 1–2 letter grade improvement.

Knowledge growth and practical effect sizes

Recent Results and Ongoing Research

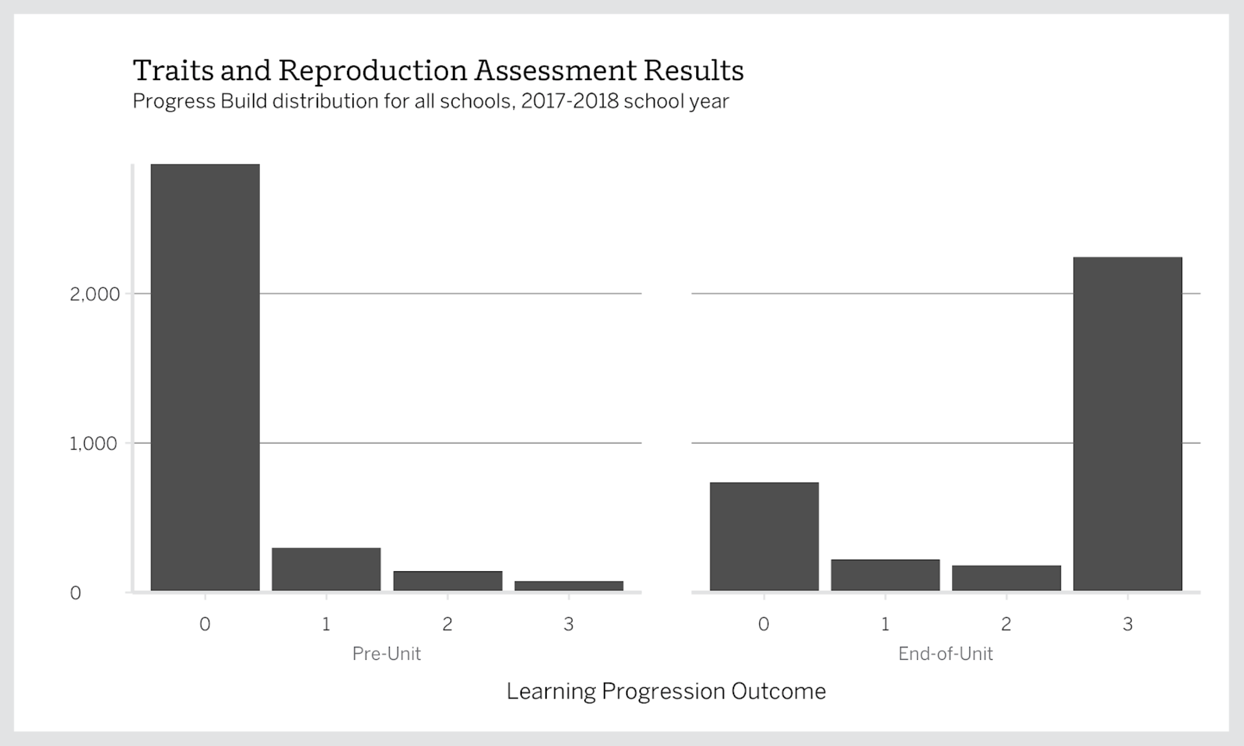

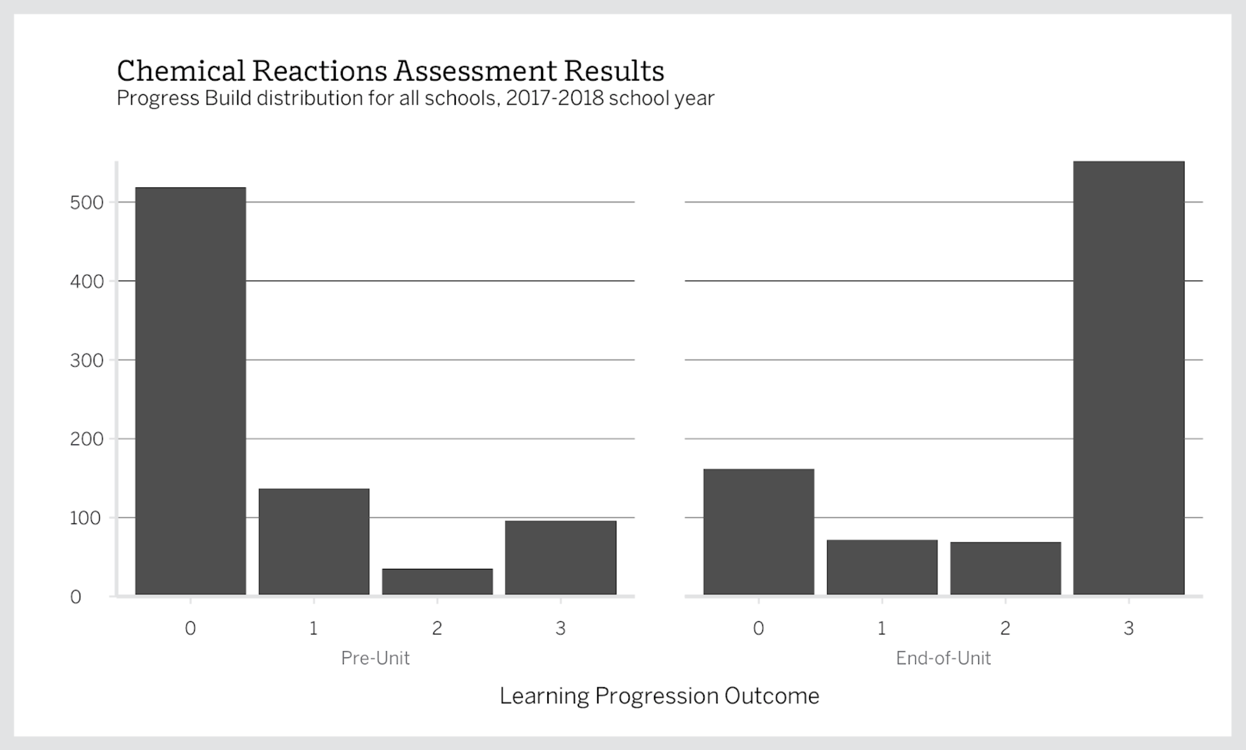

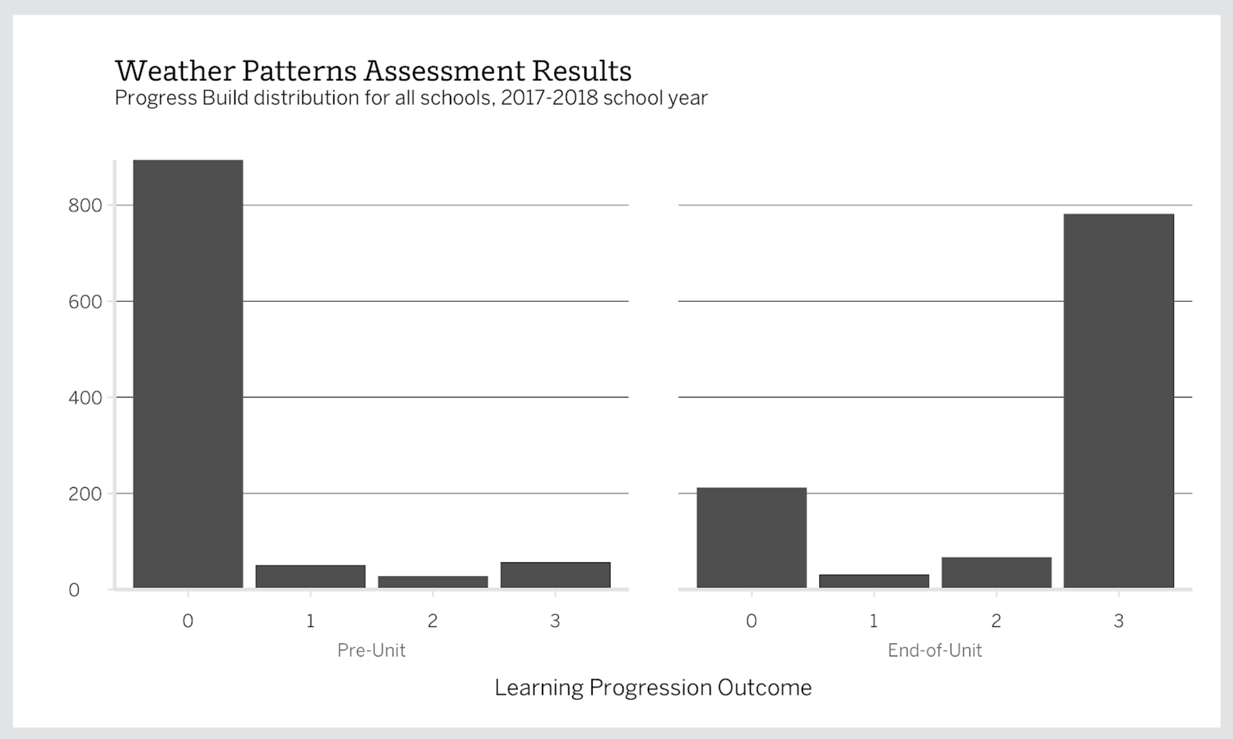

The results in this section are from classrooms across the country that began implementing the finished Amplify Science curriculum in the fall of 2017 or later. The most notable data point, depicted in the accompanying graphs, is the number of students who moved from the lowest to the highest level in the learning progression (0 to 3 in the unit’s “Progress Build”) over the course of the unit (generally 6 weeks).

The charts above show movement through the learning progression over the course of one unit of study. The learning progression in Amplify Science is outlined in what we call the Progress Build.
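For readers who want to tally this kind of movement from their own class data, the following is a minimal sketch. It assumes each student has a starting and an ending Progress Build level recorded as integers from 0 to 3; the record format, the function name, and the sample data are hypothetical and do not reflect how Amplify Science stores or reports results.

```python
from collections import Counter


def level_transitions(records):
    """Count how many students made each (start_level, end_level) move."""
    return Counter((start, end) for start, end in records)


# Hypothetical (start_level, end_level) pairs for five students.
records = [(0, 3), (0, 2), (1, 3), (0, 3), (2, 3)]

transitions = level_transitions(records)
lowest_to_highest = transitions[(0, 3)]
print(f"{lowest_to_highest} of {len(records)} students moved from level 0 to level 3")
```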

Additionally, the table below shows average Student Knowledge Growth for the 2016–2017 and 2017–2018 school years. Student Knowledge Growth is the amount by which percent-correct scores increased from Pre-Unit Assessments to End-of-Unit Assessments across the program.

| School Year | Student Knowledge Growth |
|---|---|
| 2016–2017 | 88.63% |
| 2017–2018 (fall semester only) | 89.91% |

As the table shows, outcomes in both school years were positive. The suggested effects will be investigated further, but these initial results are highly encouraging.

More plans for researching efficacy are underway, including a randomized controlled efficacy study in the 2018–2019 school year. This study will be conducted by SRI International, an independent third-party research organization. Preliminary results will be available in 2019.

Continuous Improvements

Not only is the Amplify Science team committed to pursuing relevant research studies, we are also committed to making the NGSS teaching and learning experience as enjoyable as possible, and we work hard to continually improve the curriculum to do so. In fact, one of Amplify Science’s greatest strengths is the ability to continue to develop each year, responding quickly to the needs and feedback of program users. Over the 2016–2017 school year, for instance, Amplify Science was improved numerous times in response to customer feedback, including helpful updates to the middle school program such as:

- Audio files for all science articles (providing access to students reading below grade level)

- Closed captioning and a downloadable script for every video (supporting students with hearing or visual impairments)

- Printable PDFs of all assessments (to implement assessment accommodations)

Because the program can be delivered 100% digitally, users get to see improvements like those listed above immediately upon their release. We believe this ability to continuously improve the program without requiring additional purchases or disrupting the teaching and learning experience is one of Amplify Science’s key assets. Indeed, the whole Amplify Science team looks forward to continuing to listen and respond to the needs of teachers and students, whether from direct user feedback or from efficacy research results.